In a nutshell

- 🔥 Celebrities reignite debate over whether social media is to blame for controversies, citing context collapse and algorithmic outrage that amplifies missteps.

- 🧠 Platforms act as attention engines; design choices like recommendation systems compress nuance and reward emotional salience, accelerating pile-ons and misinformation.

- ⚖️ The controversy hinges on shared responsibility: celebrities choose what to post, platforms shape how it spreads, and audiences decide whether to amplify it, which argues for slower publishing and added product friction.

- 🏛️ UK lens: the Online Safety Act and Ofcom oversight push risk assessments, transparency, and child protection while balancing free expression and creator discoverability.

- 🧭 Path forward blends algorithmic transparency, friction for high-risk content, and media literacy to curb harm without dulling culture.

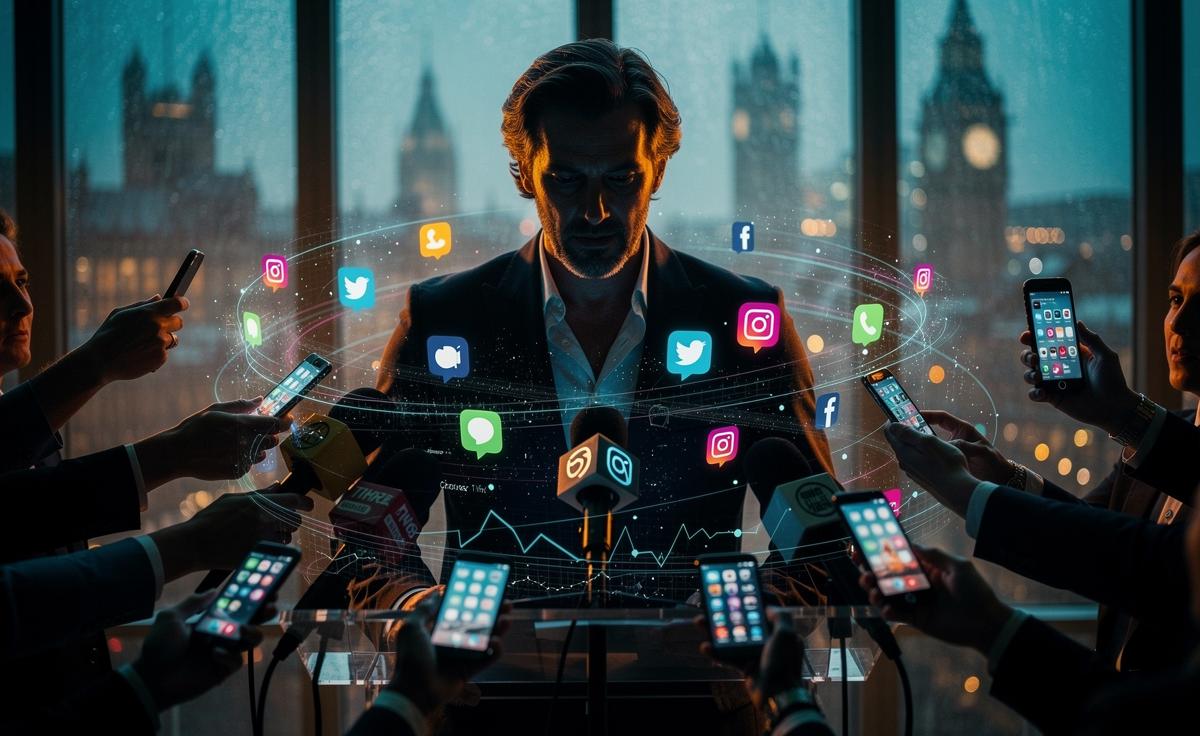

Another week, another storm in the culture weather system. A fresh wave of celebrity statements has reignited the argument over whether we should blame social media for incendiary discourse, mental strain, and the erosion of nuance. Some stars say platforms are a megaphone without a conscience. Others counter that audiences, managers, and media all feed the fire. The truth, as usual, is harder to headline. In the UK, where debate meets regulation and public pressure, the contours feel especially sharp. The question is not whether social media matters, but whether it is the prime mover of modern controversy—or merely the stage.

What Triggered the Latest Backlash

A misjudged post. A clipped video. A quick apology that convinced few. The recipe is grimly familiar, and it has brought a string of celebrities to the microphone this week to insist that algorithmic outrage is running the show. Some argue their words were stripped of context, accelerated by feeds that reward heat over light. Others admit missteps yet insist the online reaction turned critique into spectacle. What begins as disagreement can mutate into identity warfare within hours.

There is also self-interest at play. Public figures now live in perpetual campaign mode; their bottom lines are tethered to visibility, endorsements, and platform performance. PR teams push “authenticity” while eyeing the analytics dashboard. Fans become activists, then auditors. Detractors assemble dossiers. The atmosphere invites dramatic pivots—from “speak your truth” to “this is not what I meant”—as performers navigate a constant risk calculus. Visibility is currency, but it is also liability.

| Celebrity | Position | Focus | Platforms Mentioned |
|---|---|---|---|
| Jameela Jamil | Critical of virality | Health misinformation, pile-ons | Instagram, X |
| Stormzy | Balance and restraint | Context loss, creative freedom | YouTube, TikTok |
| Selena Gomez | Mental health emphasis | Breaks from platforms | Instagram, TikTok |
| Ed Sheeran | Minimal posting | Direct fan connection | Instagram, YouTube |

The thread tying these perspectives together is not denial of responsibility but vigilance about the incentive structure. The system prizes engagement above truth, and celebrities sit in the blast radius.

How Platforms Shape the Firestorm

Platforms are not neutral pipes. They are attention engines. Recommendation systems personalise feeds, but they also compress complex discourse into snackable, shareable fragments. The mechanics are subtle: a slight tweak to ranking can push conflict to the top, priming users to react before they reflect. Once a clip sticks, it spawns commentary, duets, stitches, debunks, meta-debunks. Context collapses, and the loudest reading wins.

The architecture also blurs boundaries between fans and critics, journalists and influencers, statements and rumours. In this arena, performative authenticity thrives, because the metrics reward emotional salience. That doesn’t absolve famous voices who misinform, nor does it nullify the agency of audiences. Yet the playing field is tilted. Brands, creators, and media compete inside the same casino of attention where a single hot take can outweigh a year of careful work. Design choices are editorial choices by another name.

The consequence is a cycle: controversy spikes, moderation lags, clarity arrives late—if at all. The public is left holding fragments, and fragments invite projection. Speed defeats nuance, again.

Personal Responsibility Versus Platform Power

There is an easy refuge in blaming the feed. There is an easy sneer in blaming the famous. Both miss the complexity. Celebrities control what they publish; platforms control how it spreads; audiences control whether they amplify. The labels “victim” and “villain” rarely fit cleanly. The question is not who is guilty, but how to distribute responsibility across the stack.

Consider the toolkit now standard in entertainment: social media managers, scheduled drops, coordinated cross-posts, data-led messaging. This is professionalised persuasion. When it misfires, cries of “taken out of context” can ring hollow. Yet we should also recognise the asymmetry: a poorly phrased comment can balloon into a parasocial referendum where strangers adjudicate a person’s character within minutes. That’s not accountability; that’s a lottery with reputational stakes. Everyone plays; few understand the odds.

A healthier norm requires two moves at once. Public figures should adopt slower publishing habits—draft, delay, sanity-check. Platforms should throttle velocity for high-risk content, add friction to sharing, and foreground corrections as prominently as claims. Restraint is not censorship; it is design for better speech.

Where Policy and Profit Intersect

In the UK, the Online Safety Act sets expectations for platforms to assess risks, protect children, and tackle illegal harms, with Ofcom as the regulator responsible for enforcement. That framework matters when celebrity controversies blend with harmful pile-ons, targeted harassment, or monetised misinformation. Yet regulation walks a tightrope: it must deter systemic negligence without chilling legitimate expression, satire, or criticism. Rules work when they target processes, not opinions.

Money shapes the battlefield. Advertisers do not want their brands appearing next to chaos, which nudges platforms to upgrade moderation and transparency reporting. Creators, meanwhile, depend on discoverability and fear throttling disguised as safety. The compromise is overdue: clearer algorithmic transparency, appeal pathways, origin labels for edited media, and auditable risk assessments that measure not just removal counts but reduction of harm. Sunlight is not enough without metrics that bite.

There is also a cultural lever. Media literacy—taught early, refreshed often—arms users to decode engagement bait and recognise context collapse. Coupled with product friction, it shifts incentives from performative outrage to durable trust. Regulation without literacy fixes symptoms; literacy without regulation ignores power.

So, is social media to blame? Partly. It accelerates. It distorts. It rewards the wrong things. Yet the celebrity economy also courts attention, and audiences often demand drama more than dialogue. The smarter question is practical: which changes—at the level of design, behaviour, and policy—will reduce harm without dulling culture? The next controversy is already loading; the blueprint we choose now will decide its shape. What would you change first: the rules, the algorithms, or the way we all participate?
